AGISF - Task decomposition for scalable oversight

Week 4 of the AI alignment curriculum. Scalable oversight refers to methods that enable humans to oversee AI systems that are solving tasks too complicated for a single human to evaluate. Basically divide and conquer.

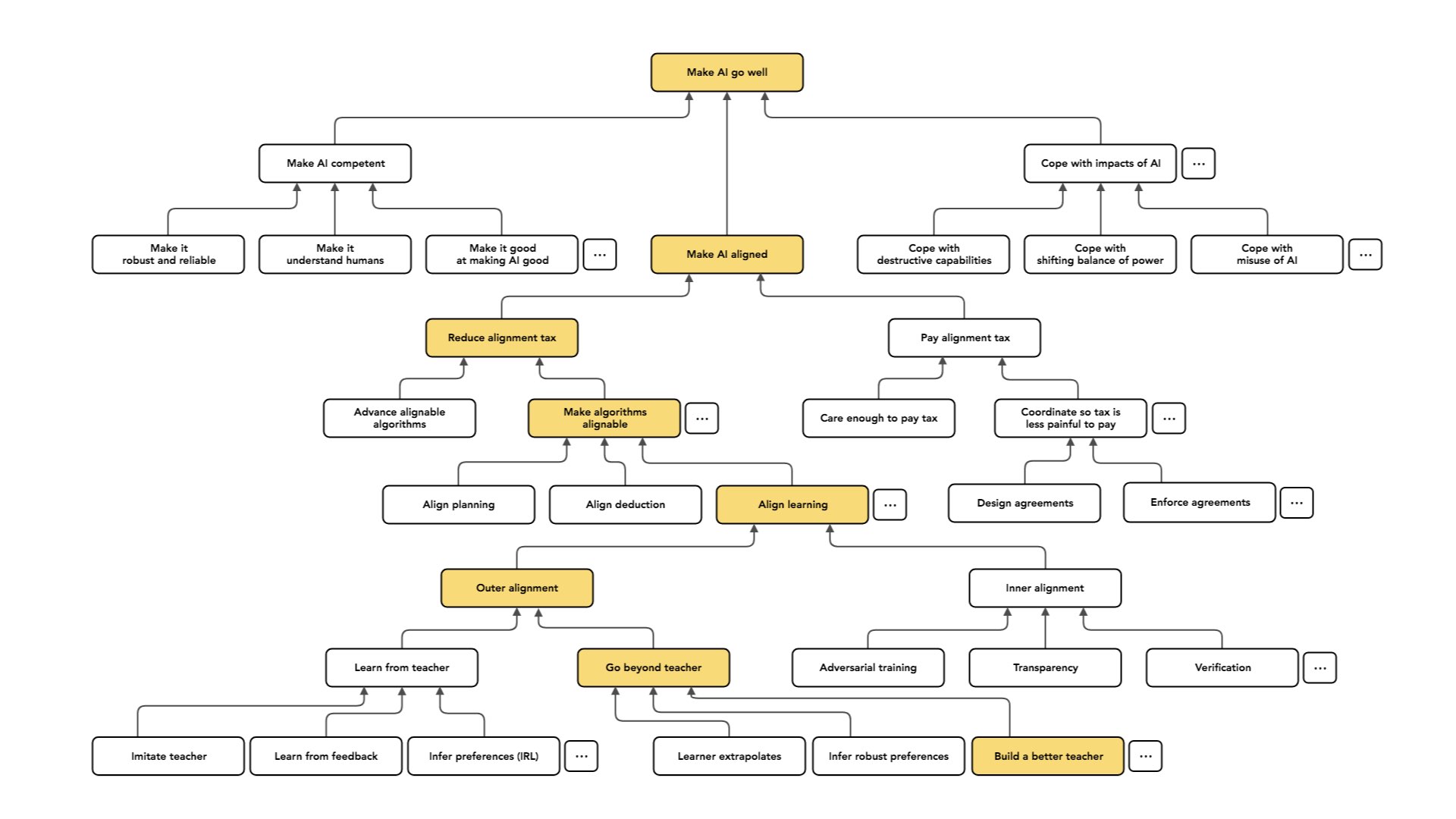

AI alignment landscape (Christiano, 2020)

- Intent alignment -> getting AIs to want to do what you want them to do

- Paul isn’t focused on reliability (how often it makes mistakes); he hopes it’ll get better along with capabilities

- Well meaning != understands me. Sort of the source of most comedies of errors?

- Paul also isn’t focused on how AIs will be used. AI governance is a separate can of worms

- Main focus is on reducing alignment tax

Inner vs Outer

- Outer alignment -> finding an objective that incentivizes aligned behavior

- Inner alignment -> making sure that the policy we ended up with is robustly pursuing the objective that we used to select it

landscape graph

Measuring Progress on Scalable Oversight for Large Language Models (Bowman, 2022)

- Sandwiching is done by having average (non-expert) humans try to get a model to answer domain-specific questions as correctly as possible, without checking external sources. Their answers are then checked by an expert, who evaluates their performance. The idea is that the levels of knowledge are avg humans < models < experts. This makes it possible to roughly simulate alignment tests while still having a way to check how well the humans did.

Comments

- Sounds like an interesting way to check out strategies that assume the AI will be better than its overseers at its task

- Still limited by the basic problems, but nice to have in a swiss cheese security model
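A minimal sketch of how a sandwiching experiment might be scored, assuming the expert's labels are treated as ground truth. The function name and all the answers below are made up for illustration and are not taken from the paper. The hope is that the human-plus-model score beats both baselines.

```python
def accuracy(answers, expert_labels):
    """Fraction of answers that agree with the expert's labels."""
    matches = sum(a == e for a, e in zip(answers, expert_labels))
    return matches / len(expert_labels)

# Toy multiple-choice data (hypothetical). The expert labels act as ground truth.
expert_labels   = ["A", "C", "B", "D", "A"]
human_alone     = ["A", "B", "B", "A", "C"]  # non-expert without the model
model_alone     = ["A", "C", "B", "A", "C"]  # model without any oversight
human_and_model = ["A", "C", "B", "D", "C"]  # non-expert working with the model

scores = {
    "human_alone": accuracy(human_alone, expert_labels),
    "model_alone": accuracy(model_alone, expert_labels),
    "human_and_model": accuracy(human_and_model, expert_labels),
}

for condition, score in scores.items():
    print(f"{condition}: {score:.0%}")
```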

Summarizing books with human feedback (Wu et al., 2021)

- It’s easier to sum up smaller chunks of text (e.g. chapters)

- Summing up a group of summaries is easier still, as there is less text and it probably contains only the most important bits

Comments

- Mistakes will get amplified -> founder effect

- Will it weight all parts the same? Not all parts of a text are equally important
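The recursive idea can be sketched roughly as below. This is a toy under stated assumptions: `summarize_stub` is a hypothetical placeholder that just keeps the first few words, standing in for the learned summarizer, and the chunk sizes are arbitrary. It is not the paper's implementation.

```python
def chunk(text, max_words):
    """Split text into consecutive pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def summarize_stub(text, max_words=8):
    """Hypothetical stand-in for a learned summarizer: keep the first few words."""
    return " ".join(text.split()[:max_words])

def summarize_recursively(text, summarize=summarize_stub, chunk_words=40):
    """Summarize chunks, then summarize the joined summaries, until the text fits.

    Assumes `summarize` returns fewer words than it is given for a full chunk;
    otherwise the recursion would not terminate.
    """
    if len(text.split()) <= chunk_words:
        return summarize(text)
    summaries = [summarize(piece) for piece in chunk(text, chunk_words)]
    return summarize_recursively(" ".join(summaries), summarize, chunk_words)

# A fake 1000-word "book" made of numbered tokens.
book = " ".join(f"w{i}" for i in range(1000))
result = summarize_recursively(book)
print(result)
```

With this stub, whatever a lower level drops never reaches the higher levels, and every chunk gets the same budget regardless of how important it is, which is one way to see both comments above.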

Supervising strong learners by amplifying weak experts (Christiano et al., 2018)

- divide harder tasks into smaller, easier ones

- train a model to solve the smaller ones

- divide even harder tasks into smaller ones that are merely hard

- train the previous model to solve the hard problems

- ??? (iterate)

- profit

Comments

- How will the AI know how to split the harder tasks up?
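A toy sketch of the amplify-then-distill loop, under heavy assumptions: the task is summing a list, the "weak expert" can only add two numbers, and "training" is just memorising answers in a lookup table. All names are hypothetical. The split is hard-coded here, so the sketch sidesteps the question in the comment above rather than answering it.

```python
def amplify(task, model):
    """Weak expert plus model: split the task, delegate the halves, add the results."""
    if len(task) == 1:
        return task[0]  # easy enough for the expert to answer directly
    mid = len(task) // 2
    return model(task[:mid]) + model(task[mid:])

def train_by_iterated_amplification(rounds):
    """Each round, the model is 'trained' on answers from the amplified system."""
    table = {}

    def model(task):
        # Raises KeyError on anything the model was never trained on.
        return table[tuple(task)]

    for tasks in rounds:
        answers = {tuple(t): amplify(t, model) for t in tasks}
        table.update(answers)  # memorisation stands in for distillation
    return model

# Each round contains tasks twice as long as the previous round's.
rounds = [
    [[3], [1], [4]],
    [[3, 1], [4, 1]],
    [[3, 1, 4, 1]],
]
model = train_by_iterated_amplification(rounds)
total = model([3, 1, 4, 1])
print(total)
```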

Language Models Perform Reasoning via Chain of Thought (Wei et al., 2022)

- Asking LLMs to explain their chain of thought results in more correct answers

Comments

- Sort of System 1 vs System 2?

- Could be a good model of heuristics/intuitions?
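To make the difference concrete, here is a small sketch that builds a standard few-shot prompt and a chain-of-thought prompt from the same worked example. The example question, the `Q:`/`A:` layout, and the function name are my own assumptions for illustration. No model is called; the code only constructs the prompt strings.

```python
def build_prompt(examples, question, with_reasoning):
    """Build a few-shot prompt, optionally including the worked reasoning."""
    blocks = []
    for ex in examples:
        final = f"The answer is {ex['answer']}."
        answer = f"{ex['reasoning']} {final}" if with_reasoning else final
        blocks.append(f"Q: {ex['question']}\nA: {answer}")
    blocks.append(f"Q: {question}\nA:")  # left open for the model to complete
    return "\n\n".join(blocks)

# Made-up worked example.
examples = [{
    "question": "A shelf holds 3 books. Two boxes of 4 books each are added. "
                "How many books are on the shelf?",
    "reasoning": "The shelf starts with 3 books. Two boxes of 4 books is "
                 "2 * 4 = 8 books. 3 + 8 = 11.",
    "answer": "11",
}]
new_question = "A jar has 6 sweets. Three bags of 5 sweets each are added. How many sweets are there?"

standard_prompt = build_prompt(examples, new_question, with_reasoning=False)
cot_prompt = build_prompt(examples, new_question, with_reasoning=True)
print(cot_prompt)
```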

Least-to-most prompting enables complex reasoning in large language models (Zhou et al., 2022)

- Breaks a question into simpler subquestions and answers them in order, from easiest to hardest, feeding the answers to earlier subquestions into the prompt for the next one
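A rough sketch of the two stages (decompose, then solve the subquestions in order while carrying earlier answers forward). `fake_decompose` and `fake_model` are hypothetical stubs with canned outputs so the example runs without a real LLM, and the prompt layout is an assumption.

```python
def least_to_most(question, decompose, ask_model):
    """Answer subquestions in order; each prompt includes the earlier Q/A pairs."""
    context = ""
    answer = None
    for sub in decompose(question):
        prompt = f"{context}Q: {sub}\nA:"
        answer = ask_model(prompt)
        context = f"{prompt} {answer}\n\n"
    return answer, context

FINAL_Q = "A round trip takes 5 minutes. How many round trips fit in 15 minutes?"
SUB_Q = "How many minutes does one round trip take?"

def fake_decompose(question):
    # Stand-in for the decomposition stage: easier subquestion first, original last.
    return [SUB_Q, question]

CANNED = {SUB_Q: "5 minutes.", FINAL_Q: "15 / 5 = 3 round trips."}
seen_prompts = []

def fake_model(prompt):
    # Stand-in for an LLM call: record the prompt, answer the last question in it.
    seen_prompts.append(prompt)
    last_question = prompt.rsplit("Q: ", 1)[1].split("\nA:")[0]
    return CANNED[last_question]

answer, transcript = least_to_most(FINAL_Q, fake_decompose, fake_model)
print(transcript)
```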