AGISF - Artificial General Intelligence

Questions

- how long till models start autofeedbacking, like AlphaZero did? This suggests that it’s already happening

- the general timeline seems to be AGI around 2050 - how accurate is that?

Core readings

Four Background Claims - (Soares, 2015)

General intelligence is a thing, and humans have it

The alternative view is that intelligence is just a collection of useful modules (speech, dexterity, etc.) that can be used in different contexts to solve stuff. This seems to track the nurture vs. nature debate?

Response

This wouldn’t explain how humans can learn new stuff so quickly.

Impact

If AGI is just a collection of tools, then it's mainly a matter of not giving it dangerous ones (hehe). Or at least in principle it should be possible to foresee the possible action spaces? If there is some kind of general g factor, then AGI development is pretty much a search to implement it - at which point there is takeoff?

Comments

- what is intelligence? The more I read, the less I know

- pure nature is obviously wrong, as rewiring experiments show that ferrets can learn to see with their auditory cortex

- the existence of various cortices, Broca's area etc. suggests that there is a certain amount of hardwiring involved in human intelligence

- a lot of (human) intelligence seems to be instincts and gut reactions, which pretty much are static tools

AI systems could become more intelligent than humans

Brains are magical

Quantum-level simulations should in theory allow us to recreate a brain in silico, so either there is a soul powering intelligence, or it has a materialistic explanation. That got deep, fast… That being said, human intelligence might be hard to achieve, but why assume it's the only type possible?

General intelligence is really hard/complicated/magical

Most animals are intelligent to a certain degree, so the basics are unlikely to be that hard. Unless it's something like eukaryotic cells, which seem to have evolved only once. Ant hills or flocking behaviours can "learn" and result in quite complicated patterns, but stem from simple rules. This seems different.

Humans are peak intelligence

Heh. Funny. Humans have constraints that a purely intelligent being wouldn't have. This suggests that even if humans are on the Pareto frontier, there are still a lot of extra gains to be made by beings that can focus on being intelligent, rather than using intelligence as a tool to achieve other goals.

Impact

There doesn't seem to be any intrinsic limit to how intelligent systems can be.

Intelligent systems will shape the future

I'd rephrase this to "the most capable systems will shape the future", in the same sense as survival of the fittest. Either because they're simply better at whatever they're supposed to do (e.g. controlling and monitoring large amounts of data), because they're generally more capable (e.g. having an equivalent of IQ 300, or being 1000x faster), or because they don't have to bother with as many distractions (e.g. how psychopaths find it easier to get into positions of power).

To a certain extent this will be true even if the AI isn't in charge, as the infrastructure etc. will change to better utilise its strengths. The same way as buildings need to be built around pipes.

Impact

This is fine, as long as the systems result in futures that are nice. Otherwise this seems bad, for a whole list of reasons:

- side effects - wildernesses get paved over with server farms (very unlikely, but still)

- loss of agency - why bother doing anything if the system will do it better? Though people still make artisanal knick-knacks, so that should be fine. There will be fewer blacksmiths, but on the other hand, many more people can do what they want, rather than what they're needed for?

- dystopian lock-ins - if a future is chosen that is Bad, it’ll be hard to get out of it. Same as how there are only a few native reserves left

Not beneficial by default

This seems a lot less controversial now, but that could be a function of my bubble. Humans tend to be empathetic etc. to other tribe members. This seems like an inbuilt mechanism, and it also appears in other herd animals. Which is totally logical, seeing as you don't want them killing each other. This behaviour tends to be automatic. People become more tolerant in proportion to how well off society is, but that seems to be more a matter of expanding the definition of one's tribe to all of humanity/animals/whatever. So it's not that they come to the logical conclusion that it's best to do so, but more that they redirect their preexisting circuitry.

An argument could be made that it's good to be nice, so that the humans don't turn you off. But that isn't the only possible reaction. Pretending to be nice until you're powerful enough to kill your enemies is a tried and true method of gaining power.

AGI safety from first principles (Ngo, 2020)

This is mainly about humans potentially becoming a second (class) species, if the following 4 points happen:

- AIs will be superintelligent

- they’ll be autonomous and agentic

- their goals will be misaligned by default

- the above combination will result in them gaining control of humanity’s future

Definition of intelligence

Intelligence is the ability to achieve goals in a wide range of environments.

This seems extremely game-able…

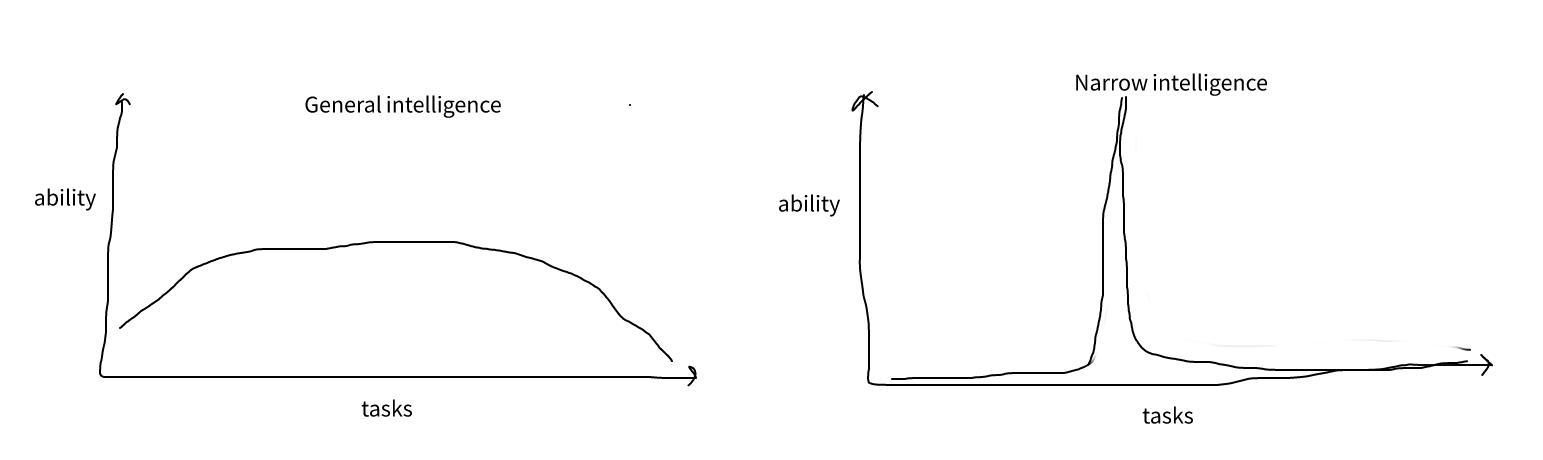
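Ngo's definition is Legg and Hutter's, whose formal version scores an agent by summing its expected reward over all environments, each weighted by 2^-K, where K is the environment's Kolmogorov complexity. A toy sketch of why that can feel game-able: the environments, their "complexity" in bits, and the agents' rewards below are all made up, and real K is uncomputable. Because simple environments dominate the weighting, a specialist that aces only the simplest one outscores a middling generalist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    # Hypothetical stand-in for Kolmogorov complexity in bits (the real thing is uncomputable).
    complexity_bits: int


def toy_universal_intelligence(performance: dict[str, float], envs: list[Environment]) -> float:
    """Sum over environments of 2^-complexity * expected reward, with reward in [0, 1]."""
    score = 0.0
    for env in envs:
        reward = performance.get(env.name, 0.0)  # environments the agent can't handle count as 0
        if not 0.0 <= reward <= 1.0:
            raise ValueError(f"reward for {env.name} must be in [0, 1], got {reward}")
        score += 2.0 ** -env.complexity_bits * reward
    return score


# Hypothetical environments, simplest first.
ENVS = [
    Environment("tic_tac_toe", 3),
    Environment("chess", 6),
    Environment("open_world", 10),
]

specialist = toy_universal_intelligence({"tic_tac_toe": 1.0}, ENVS)
generalist = toy_universal_intelligence({env.name: 0.5 for env in ENVS}, ENVS)
print(f"specialist: {specialist:.4f}, generalist: {generalist:.4f}")
```

With these numbers the specialist gets 0.1250 and the generalist 0.0708, so the ranking depends heavily on how "simple" is measured.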

Narrow vs general intelligence

- Narrow is very good at one task, e.g. AlphaGo, but can’t do anything else

- General is good at a wide variety of tasks, using previously learned techniques to solve novel problems

Narrow isn't just general focused on one very specific thing - otherwise it could be transferred to other tasks and learn them as well. Or could it?

General seems harder, as it needs a lot more training to approach narrow on narrow’s tasks. Sort of like:

Visualizing the deep learning revolution (Ngo, 2022)

Gives a list of systems that show how fast things are going:

- vision, from MNIST to video generation

- games, from Pong to Go, to Minecraft

- NLP, from weird stuff, through GPTs to Google PaLM explaining jokes

- various stuff - Codex/Copilot, AlphaFold, Google Minerva (math solver)

Creating a Space Game with OpenAI Codex (OpenAI, 2021) (video)

This was astounding. Like living through the mother of all demos. I need to get me some Codex access.

Future ML systems will be qualitatively different (Steinhardt, 2022)

Emergent behaviours are a thing. In the case of AI, more data/compute tend to cause interesting things to start happening:

- deep learning - on small scales it struggles, but with more resources is a lot better than alternatives. Pretty much like choosing a database? Or hashmap vs list?

- few shot learning

- grokking
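The hashmap vs list analogy can be made concrete with a toy cost model: a successful linear scan costs about (n + 1) / 2 comparisons on average, while a hash lookup has a fixed overhead. The overhead of 10 "comparison units" is a made-up number; the point is only that the better choice flips once n is big enough, a bit like deep learning losing at small scale and winning at large scale.

```python
HASH_LOOKUP_COST = 10.0  # hypothetical fixed cost of hashing + bucket probe, in comparison units


def list_lookup_cost(n: int) -> float:
    """Average comparisons for a successful linear scan over n items."""
    return (n + 1) / 2


def cheaper_structure(n: int) -> str:
    """Which structure the toy cost model prefers for n items (ties go to the list)."""
    return "list" if list_lookup_cost(n) <= HASH_LOOKUP_COST else "hashmap"


crossover = next(n for n in range(1, 10_000) if cheaper_structure(n) == "hashmap")
print(f"list wins up to n={crossover - 1}, hashmap wins from n={crossover}")
```

Real crossovers depend on the language, hardware and key types, so this only illustrates the shape of the argument.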

Impact

The basic engineering approach of most MLers of extrapolating current trends is probably not a good idea, seeing as massive changes happen every couple of years that turn everything upside down. Hence philosophical arguments can be quite useful.

Biological Anchors: A Trick That Might Or Might Not Work (Alexander, 2022)

Various mumbo-jumbo and Fermi estimates to come up with the traditional date of ~2050. Which is suspiciously 30 years in the future… The general conclusion is that no one really knows when, but pretty soon, so be prepared. Sort of like the second coming?

Intelligence explosion: evidence and import (Muehlhauser and Salamon, 2012)

Basically says why AIs are better, and what will happen if they get power:

- Increased computational resources - computer networks can scale up

- Communication speed - axon signals travel at 75 m/s or less, whereas electronic signals travel at a large fraction of the speed of light. This means that computers can literally think faster

- Increased serial depth - human brains are massively parallel, because they can't rapidly perform computations needing more than about 100 sequential steps. Computers don't have that problem

- Duplicability - programs/weights/data can be copied, so it's trivial to clone working AIs

- Editability - humans are a lot harder to modify than computer data

- Goal coordination - 1000 copies of the same AI will probably work together, or at least more so than 1000 different people

- Improved rationality - AIs needn't be swayed by feelings and such. This I have vague doubts about…
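Back-of-the-envelope numbers for the speed and serial-depth points. Only the 75 m/s axon speed and the ~100 serial steps come from the list above; the 0.1 m distance, the ~2e8 m/s electronic signal speed, the 0.5 s task, the ~5 ms per neural step and the 1 GHz clock are my own rough assumptions.

```python
DISTANCE_M = 0.1        # assumption: roughly the width of a human brain
AXON_SPEED_M_S = 75.0   # axon signal speed quoted above
WIRE_SPEED_M_S = 2.0e8  # assumption: about two thirds of the speed of light
TASK_TIME_S = 0.5       # assumption: time for a quick perceptual task
NEURON_STEP_S = 0.005   # assumption: ~5 ms per sequential neural step
CPU_CLOCK_HZ = 1.0e9    # assumption: 1 GHz processor, one serial step per cycle

axon_delay_s = DISTANCE_M / AXON_SPEED_M_S
wire_delay_s = DISTANCE_M / WIRE_SPEED_M_S
speed_ratio = axon_delay_s / wire_delay_s

brain_serial_steps = TASK_TIME_S / NEURON_STEP_S
cpu_serial_steps = TASK_TIME_S * CPU_CLOCK_HZ

print(f"axon delay: {axon_delay_s * 1e3:.2f} ms, wire delay: {wire_delay_s * 1e9:.2f} ns")
print(f"signal speed ratio: ~{speed_ratio:,.0f}x")
print(f"serial steps in {TASK_TIME_S} s: brain ~{brain_serial_steps:.0f}, CPU ~{cpu_serial_steps:.0e}")
```

Under these assumptions signals move a few million times faster, and a single core gets millions of times more serial steps in the same time.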

Impact

Humans to superintelligences will be as apes are to humans. Cute, maybe, but irrelevant and can be removed if in the way.

Further Readings

Success stories

Collection of GPT-3 results (Sotala, 2020)

Twitter collection of various astounding things done by GPT-3. Old hat now, which in its own way is terrifying…

CICERO: an AI agent that negotiates, persuades, and cooperates with people (Bakhtin et al., 2022)

Diplomacy bot. Zvi says nothing to see here, move along. Mainly done by having a bot that seems human and talks with everyone.

AGI

Three Impacts of Machine Intelligence (Christiano, 2014)

The impacts are:

- Growth will accelerate, probably significantly - your basic exponential advancement, as with the industrial revolution

- Human wages will fall, probably very far - demand and supply. When robots can do everything better, why pay? Paul hopes that humans will still be well off, even if they don’t work

- Human values won’t be the only thing shaping the future - pretty much the same as the previous articles

AI: racing towards the brink (Harris and Yudkowsky, 2018)

Too long :/

Most important century (Karnofsky, 2021)

TL;DR, but my understanding is that it’s a combination of:

- growth can't keep going exponentially forever

- founder effects, since all future actions have to flow through today - the future will be defined by what is done with it now
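The "growth can't keep going" point is basically compound-interest arithmetic. A sketch assuming 2% annual growth sustained for 8,200 years, compared against a rough upper bound of 10^70 atoms in the galaxy; if I remember right these are the kind of figures Karnofsky uses, but treat them as illustrative assumptions.

```python
import math


def growth_orders_of_magnitude(annual_rate: float, years: int) -> float:
    """log10 of the total growth factor after compounding `annual_rate` for `years`.

    Working in log space avoids overflowing a float for very long horizons.
    """
    return years * math.log10(1.0 + annual_rate)


ANNUAL_GROWTH = 0.02        # assumption: roughly today's world growth rate
YEARS = 8_200               # assumption: illustrative horizon
ATOMS_IN_GALAXY_LOG10 = 70  # assumption: rough upper bound of ~10^70 atoms

oom = growth_orders_of_magnitude(ANNUAL_GROWTH, YEARS)
print(f"economy after {YEARS:,} years: ~10^{oom:.1f} times today's")
print(f"today-sized economies per atom: ~10^{oom - ATOMS_IN_GALAXY_LOG10:.1f}")
```

That comes out at roughly 10^70.5, i.e. a few of today's world economies per atom, which is why steady exponential growth for that long looks implausible.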

Scaling

The Bitter Lesson (Sutton, 2019)

General method + lots of compute will beat other approaches.

The bitter lesson is based on the historical observations that

- AI researchers have often tried to build knowledge into their agents

- this always helps in the short term, and is personally satisfying to the researcher

- in the long run it plateaus and even inhibits further progress

- breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach.

One thing that should be learned from the bitter lesson is the great power of general purpose methods, of methods that continue to scale with increased computation even as the available computation becomes very great. The two methods that seem to scale arbitrarily in this way are search and learning.

The second general point to be learned from the bitter lesson is that the actual contents of minds are tremendously, irredeemably complex; we should stop trying to find simple ways to think about the contents of minds, such as simple ways to think about space, objects, multiple agents, or symmetries. All these are part of the arbitrary, intrinsically-complex, outside world. They are not what should be built in, as their complexity is endless; instead we should build in only the meta-methods that can find and capture this arbitrary complexity. Essential to these methods is that they can find good approximations, but the search for them should be by our methods, not by us. We want AI agents that can discover like we can, not which contain what we have discovered. Building in our discoveries only makes it harder to see how the discovering process can be done.

AI and efficiency (Hernandez and Brown, 2020)

It’s not just more compute being available, the underlying algorithms are also getting better. So the available compute (or cost per FLOP, or whatever) is going up, and at the same time the amount of compute needed is going down. Concerning!
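Why the two trends compound: hardware gains multiply how much compute a budget buys, and algorithmic gains divide how much compute a given result needs, so "effective compute" grows by the product. The 16-month halving time is Hernandez and Brown's estimate for reaching AlexNet-level ImageNet performance; the 24-month hardware doubling is purely an illustrative assumption, and the function name is my own.

```python
def effective_compute_multiplier(
    months: float, hw_doubling_months: float, algo_halving_months: float
) -> float:
    """Growth in effective compute over `months` from hardware and algorithmic progress.

    hardware_gain: how much more raw compute the same budget buys.
    algorithmic_gain: how much less compute the same result needs.
    Both compound over time, so the overall gain is their product.
    """
    hardware_gain = 2.0 ** (months / hw_doubling_months)
    algorithmic_gain = 2.0 ** (months / algo_halving_months)
    return hardware_gain * algorithmic_gain


ALGO_HALVING_MONTHS = 16.0  # Hernandez and Brown's ImageNet estimate
HW_DOUBLING_MONTHS = 24.0   # assumption: illustrative hardware price-performance trend

for years in (1, 4, 10):
    gain = effective_compute_multiplier(12 * years, HW_DOUBLING_MONTHS, ALGO_HALVING_MONTHS)
    print(f"{years:>2} years: ~{gain:,.0f}x")
```

Over 4 years that's 4x from hardware times 8x from algorithms, so ~32x, before even counting bigger budgets.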

2022 expert survey on progress in AI (Stein-Perlman, Weinstein-Raum and Grace, 2022)

They contacted ~4000 experts. The median is 35 years to AGI. So pretty much standard.

AI Forecasting: One Year in (Steinhardt, 2022)

People consistently underestimate AI progress. Both the general public and ML researchers. By quite a bit. The lesson here is to greatly update in the direction of things going faster.

Large language models can self-improve (Huang et al., 2022)

Whoops! AI self-improvement! Would like to see more on this topic.
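As I understand Huang et al., the trick is: sample several chain-of-thought answers per unlabeled question, majority-vote the final answers (self-consistency), and fine-tune the model on the high-confidence results. A minimal sketch of just the filtering step; sampling and fine-tuning are left out, and the function names, the `min_agreement` threshold and the example answers are all hypothetical.

```python
from collections import Counter
from typing import Optional


def majority_answer(sampled_answers: list[str], min_agreement: float = 0.5) -> Optional[str]:
    """Self-consistency vote: return the most common final answer if at least
    `min_agreement` of the sampled reasoning paths reached it, else None."""
    if not sampled_answers:
        return None
    answer, count = Counter(sampled_answers).most_common(1)[0]
    return answer if count / len(sampled_answers) >= min_agreement else None


def build_pseudo_labelled_set(
    samples_by_question: dict[str, list[str]], min_agreement: float = 0.5
) -> list[tuple[str, str]]:
    """Keep only questions whose sampled answers agree enough; these become fine-tuning data."""
    dataset = []
    for question, answers in samples_by_question.items():
        label = majority_answer(answers, min_agreement)
        if label is not None:
            dataset.append((question, label))
    return dataset


# Hypothetical final answers extracted from four sampled completions per question.
samples = {
    "What is 6 * 7?": ["42", "42", "41", "42"],
    "Ambiguous riddle": ["a", "b", "c", "d"],
}
print(build_pseudo_labelled_set(samples))
```

The model never sees a ground-truth label; agreement between its own samples stands in for correctness, which is what makes it "self" improvement.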